Overview

In the demanding world of healthcare, professionals often face significant emotional challenges. These challenges can be exacerbated by administrative burdens that detract from the quality of patient care. It's crucial to address these concerns with effective strategies that not only enhance operational efficiency but also uplift the spirits of those providing care.

Best practices for AI evaluation in healthcare begin with defining clear objectives. Engaging diverse stakeholders ensures that all voices are heard, fostering a collaborative environment. Utilizing established frameworks provides a solid foundation for implementation, while investing in staff training empowers healthcare providers to navigate these changes confidently.

Moreover, establishing continuous feedback mechanisms creates a supportive loop where concerns can be addressed promptly. By integrating these strategies, healthcare organizations can significantly enhance care quality and operational efficiency. This approach also alleviates some of the emotional burdens faced by providers, allowing them to focus more on their patients.

As we move forward, let us commit to these practices. Together, we can create a healthcare environment that not only meets operational goals but also nurtures the well-being of those who care for us. What steps can you take today to support this vision?

Introduction

In the rapidly evolving landscape of healthcare, the integration of artificial intelligence (AI) presents both promise and challenges. Many healthcare providers feel overwhelmed, striving to enhance patient care through advanced technologies. This journey can often feel daunting, but a thorough evaluation of AI systems is essential.

This evaluation process is not just about assessing effectiveness and safety; it also addresses the ethical considerations that matter deeply to both patients and providers. How can we ensure that these technologies align with our core values? Organizations can navigate the complexities of AI implementation with confidence by exploring key factors such as:

- Clinical outcomes

- User experience

- Adherence to ethical standards

While the path toward effective AI evaluation in healthcare is fraught with challenges, it also presents unprecedented opportunities. Imagine transforming patient care and operational efficiency in ways we have only dreamed of. Together, we can embrace this journey, ensuring that the integration of AI serves to uplift and enhance the healthcare experience for everyone involved.

Understanding AI Evaluation in Healthcare

The process of AI evaluation in medical settings is not just a technical necessity; it’s a compassionate commitment to enhancing patient care. This evaluation encompasses the assessment of effectiveness, safety, and ethical implications of AI technologies deployed in clinical environments. It is essential to ensure that these systems not only provide precise outcomes but also align with the values and requirements of both providers and patients.

A comprehensive evaluation framework should incorporate several key factors:

- Clinical Outcomes: The primary goal of AI in healthcare is to improve patient care, so evaluating how AI affects clinical outcomes is essential. Recent studies suggest that 89% of medical practitioners believe AI will accelerate processes, highlighting the potential for improved efficiency in treatment management. Moreover, AI's predictive analytics capabilities can help forecast disease progression and identify individuals at higher risk of developing certain conditions. By analyzing patient data and patterns, AI algorithms can provide early warnings, enabling preventive measures that lead to better patient outcomes and reduced costs associated with advanced treatments. CosmaNeura's solutions exemplify this proactive approach.

- User Experience: The integration of AI tools must consider the user experience of medical providers. A positive user experience can lead to higher adoption rates and better outcomes. For instance, a case study titled "Doctors' Confidence in AI" revealed that pathologists exhibited the highest confidence in AI's capabilities, while skepticism remains among specialties like psychiatry and radiology. This suggests that specialty-specific evaluations are necessary to address varying levels of acceptance.

- Moral Standards: Following moral guidelines is crucial, particularly in faith-oriented medical environments. Assessing AI technologies from the perspective of moral consequences ensures that they correspond with the moral obligations of service providers. This is particularly relevant as 91% of physicians express the need for AI-generated materials to be validated by medical experts before being utilized in clinical decisions. By ensuring that ethical standards are met, the effectiveness of predictive analytics in improving health outcomes can be significantly enhanced.

By understanding these elements, medical organizations can make informed decisions regarding AI implementation that ultimately improve care and operational efficiency. Notably, as of December 2023, only 25% of medical executives had adopted generative AI solutions, indicating a significant opportunity for growth and improvement in AI evaluation practices. This adoption rate emphasizes the significance of incorporating predictive analytics into clinical environments to enhance outcomes as the landscape of medical services continues to change.

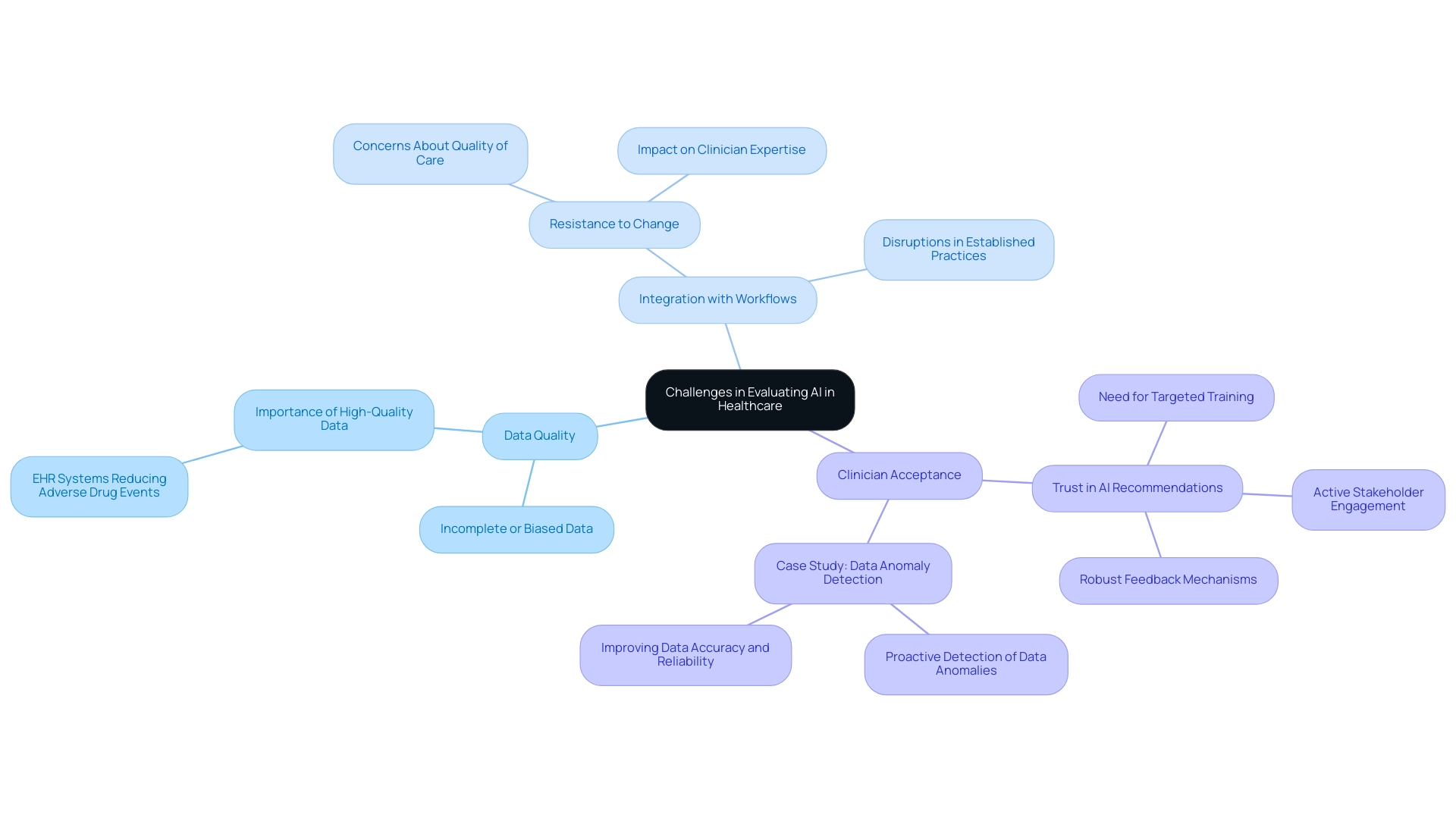

Challenges in Evaluating AI in Healthcare Settings

Assessing AI in medical environments presents a complex array of challenges that healthcare providers must navigate, particularly regarding data quality, integration with existing workflows, and clinician acceptance. One significant hurdle is the prevalence of incomplete or biased data, which can severely compromise the accuracy of AI predictions. The stakes are high: a study published in the Journal of the American Medical Informatics Association highlighted that Electronic Health Record (EHR) systems have significantly reduced adverse drug events in hospitals, which underscores the critical role of high-quality data in patient safety.
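
One way to make "incomplete or biased data" concrete is a small audit run before any model is evaluated. The sketch below is illustrative only: the record layout, the field names (`age`, `sex`, `creatinine`), and the helper functions are assumptions invented for this example, not part of any EHR standard or product.

```python
from collections import Counter


def missing_rate_by_field(records, fields):
    """Return the fraction of records where each field is absent or None."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to audit")
    return {
        field: sum(1 for r in records if r.get(field) is None) / total
        for field in fields
    }


def subgroup_shares(records, field):
    """Return each subgroup's share of the records that have a value for `field`."""
    counts = Counter(r[field] for r in records if r.get(field) is not None)
    known = sum(counts.values())
    return {group: n / known for group, n in counts.items()} if known else {}


# Hypothetical, hand-made records; the field names are illustrative only.
records = [
    {"age": 71, "sex": "F", "creatinine": 1.1},
    {"age": 64, "sex": "M", "creatinine": None},
    {"age": None, "sex": "M", "creatinine": 0.9},
    {"age": 58, "sex": "M", "creatinine": 1.4},
]

missing = missing_rate_by_field(records, ["age", "sex", "creatinine"])
shares = subgroup_shares(records, "sex")
```

High missing rates point to incompleteness, while lopsided subgroup shares hint at groups the model may serve poorly. What counts as "too high" or "too lopsided" is a judgment for the evaluation team, so no thresholds are assumed here.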

Moreover, integrating AI systems into established clinical workflows can lead to disruptions, provoking resistance among medical staff. This resistance is particularly pronounced when clinicians feel that AI recommendations may undermine their expertise or alter their established practices. As noted in recent discussions, a significant part of the medical field is resistant to innovation, with doctors frequently voicing concerns about the potential effects on the quality of care and on interactions with patients.

A recent survey revealed that only 25% of medical executives had implemented generative AI solutions, indicating a widespread hesitance to adopt these technologies. This hesitance, as noted by the Keragon Team, reflects a deeper concern about the integration of AI in patient care.

Clinician acceptance is paramount; professionals in the field must trust AI recommendations to leverage them effectively in patient care. Overcoming this barrier necessitates a comprehensive strategy that includes targeted training, active stakeholder engagement, and robust feedback mechanisms. For instance, a case study on utilizing machine learning for data anomaly detection demonstrated that proactively identifying unusual patterns in medical data not only improved data quality but also fostered clinician confidence in AI applications.
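
To illustrate the idea of flagging unusual patterns in medical data, here is a minimal sketch. It does not reproduce the machine learning method from the case study, whose details are not given; it swaps in a simple statistical rule, the modified z-score based on the median absolute deviation, with a commonly used cutoff of 3.5. The function name and the sample readings are assumptions made for this example.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute
    deviation (MAD), so a single extreme entry does not distort the scale.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


# Hypothetical heart-rate readings; 720 looks like a data-entry slip for 72.
heart_rates = [72, 75, 70, 68, 74, 71, 720, 73]
suspect = flag_anomalies(heart_rates)
```

Flagged entries are candidates for human review rather than automatic deletion, which keeps clinicians in the loop and supports the trust-building described above.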

This case study illustrates the challenges faced by medical startups like CosmaNeura in earning clinician trust and acceptance. As we look to the upcoming years, it is vital to assess whether AI can revolutionize medicine while addressing key concerns.

Additionally, contemplating the moral implications of AI integration is essential. Adhering to Catholic teachings in medical services offers a unique perspective on AI integration, ensuring that technological advancements align with moral and ethical standards.

Ultimately, addressing these challenges is essential for the successful evaluation and implementation of AI in medical settings. By prioritizing data integrity and clinician involvement, providers can enhance the reliability of AI predictions, leading to improved outcomes and more efficient operations. Together, we can navigate these complexities, fostering a supportive environment where both technology and compassion thrive.

Frameworks and Methodologies for Effective AI Evaluation

In the ever-evolving landscape of healthcare, assessing AI tools can feel daunting. Organizations are encouraged to adopt a multi-dimensional approach that encompasses technical performance, usability, and clinical impact. The AI for IMPACTS framework stands out as a valuable guide, highlighting the significance of real-world outcomes and stakeholder feedback in the assessment process. This framework not only enables a thorough evaluation of AI tools but also aligns with the ethical standards essential in healthcare settings, especially as CosmaNeura works to revolutionize medical practice management and enhance care quality in harmony with Catholic healthcare principles.

Continuous improvement is vital in this journey. By incorporating iterative assessment processes, AI systems can adapt to the ever-changing clinical needs of healthcare providers. This adaptability is crucial, ensuring that the technologies remain relevant and effective in enhancing patient care. For instance, a recent study revealed that variables in the final model accounted for 89% of the variance in clinical outcomes, underscoring the importance of robust evaluation methodologies.
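
A figure such as "89% of the variance" is typically reported as the coefficient of determination, R². Assuming that is the statistic meant here, the sketch below shows how it is computed from observed and predicted values. The numbers are made up for illustration and do not reproduce the cited study.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_obs = sum(observed) / len(observed)
    # Total variation of the observed outcomes around their mean.
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    # Variation left unexplained by the model's predictions.
    ss_res = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    if ss_tot == 0:
        raise ValueError("observed values have no variance")
    return 1 - ss_res / ss_tot


# Hypothetical outcome scores and model predictions.
observed = [2.0, 4.0, 6.0, 8.0]
predicted = [2.5, 3.5, 6.5, 7.5]
score = r_squared(observed, predicted)  # 1 - 1/20 = 0.95
```

R² computed on the same data used to fit a model tends to be optimistic, which is one reason iterative re-assessment on new data matters.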

Success stories abound, demonstrating that the adoption and integration of AI systems in medical settings hinge on clear evidence of their effectiveness in real-world contexts. The case study titled 'Adoption and Integration of AI in Healthcare' illustrates how showcasing the practical utility of AI models fosters acceptance among clinicians and patients alike, ultimately leading to improved service delivery. As Kathrin Cresswell, PhD, aptly states, "Learning from the wealth of existing HIT evaluation experience will help patients, professionals, and wider health care systems."

By utilizing these comprehensive frameworks, service providers can systematically assess AI technologies, ensuring informed decisions regarding their implementation. This strategic approach not only enhances operational efficiency but also reinforces the commitment to principled practices in alignment with Catholic values. It emphasizes the collaborative effort behind the Delphi process, bringing together expert clinicians and IT professionals to create a brighter future for patient care.

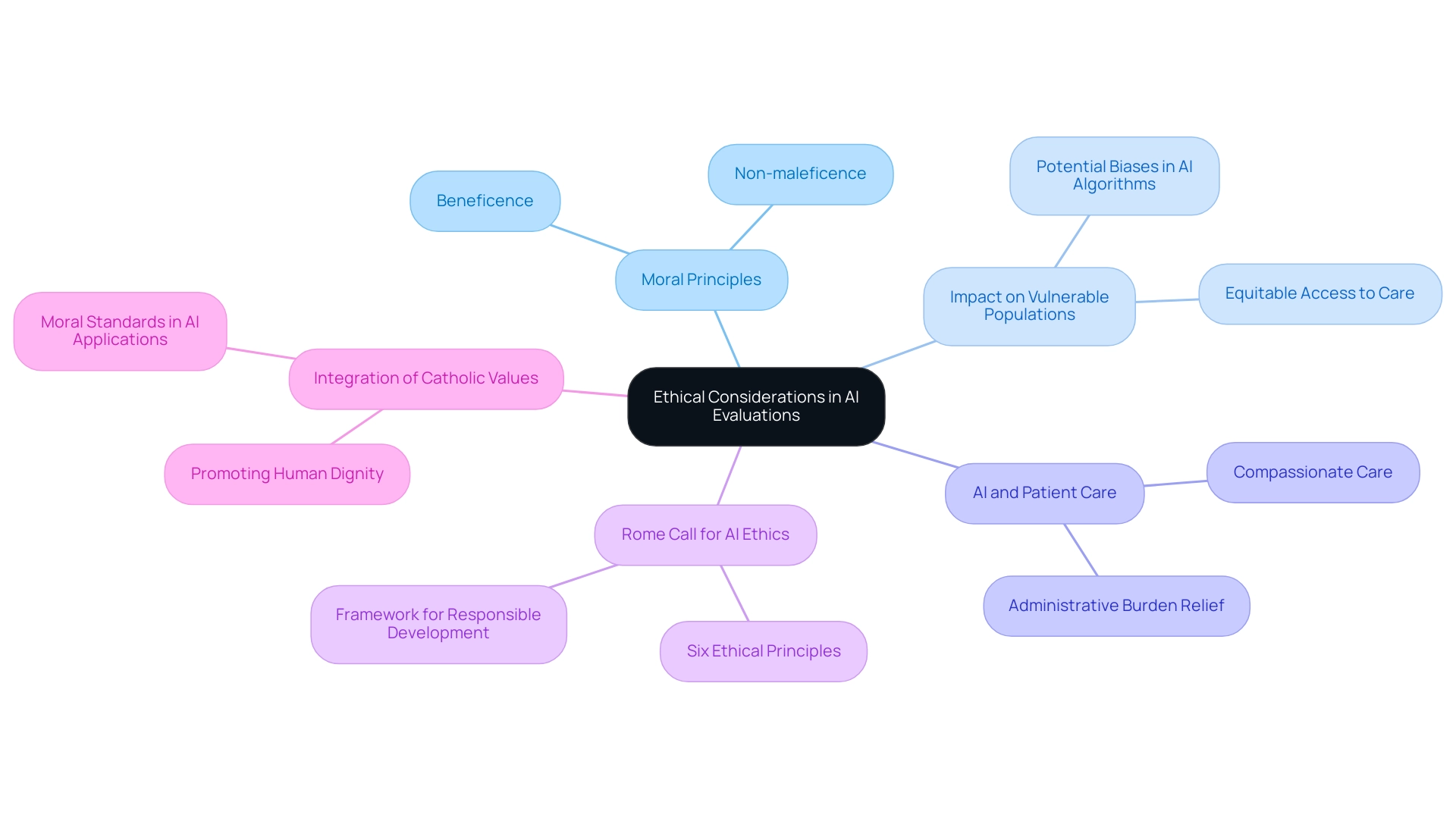

Ethical Considerations in AI Evaluations: Aligning with Catholic Values

Aligning AI assessments with moral principles is vital for medical providers who adhere to Catholic teachings. This alignment not only respects individual dignity but also promotes equitable access to care, embodying the principles of beneficence and non-maleficence. Are we truly aware of the potential biases in AI algorithms, especially regarding their impact on vulnerable populations?

By incorporating ethical principles into the assessment process, medical organizations can harness AI technologies to improve care for individuals while remaining true to their moral and ethical obligations. Imagine a healthcare environment where technology enhances compassion and respect in patient interactions.

At CosmaNeura, we recognize that the medical system was not initially designed with the welfare of doctors and patients in mind. Our commitment to quality evaluation drives our success in healthcare AI solutions, ensuring that we continuously assess the effectiveness of our innovations. For instance, our AI-driven scheduling tool significantly alleviates administrative burdens on physicians, allowing them to focus more on meaningful interactions with patients.

The Rome Call for AI Ethics (RCAIE), established in February 2020, serves as a pivotal framework guiding the responsible development of AI tools. This document, endorsed by influential organizations, outlines six principles emphasizing respect for human dignity and the promotion of the common good. Such frameworks are essential for ensuring that AI systems are developed transparently and inclusively, ultimately benefiting all members of the human family, especially the most vulnerable.

Moreover, expert opinions highlight the necessity of embedding Catholic values within AI systems. This involves promoting the dignity of individuals and ensuring that AI applications do not undermine moral standards. For example, AI technologies can be designed to enhance patient interactions, ensuring that care remains compassionate and respectful.

Pope Francis's warning about "the risk of man being ‘technologized’, rather than ‘technology humanized’" underscores the importance of ensuring that technology serves humanity and upholds moral standards. Integrating these moral considerations into AI evaluations not only aligns with Catholic teachings but also empowers service providers to offer care that is both innovative and morally responsible. By prioritizing moral standards, medical organizations can navigate the complexities of AI technology while reinforcing their commitment to patient-centered care.

While AI technology offers possibilities for advancing the common good, it also presents risks that necessitate careful moral reflection. How can we address both the benefits and challenges of AI to ensure our use of technology aligns with our mission? By engaging in these reflections, medical providers can ensure that their technological advancements truly serve the needs of those they care for.

Continuous Improvement: Iterating AI Evaluation Processes

Continuous improvement in AI evaluation is essential for navigating the ever-evolving landscape of medical services. Many startups encounter resistance to innovation when engaging with doctors, which can be disheartening. To address this, organizations must establish robust feedback loops that facilitate real-time assessment of AI performance and user experiences. This approach not only helps in quickly identifying areas needing improvement but also ensures that AI technologies remain closely aligned with clinical goals and patient needs.

Regular audits and updates to AI systems are critical in this context. By systematically reviewing performance metrics and user feedback, providers can uncover insights that drive innovation and operational excellence. For instance, organizations that have successfully integrated AI into their continuous improvement processes, as highlighted in the case study "Best Practices for Integrating AI into Continuous Improvement," have reported significant advancements in efficiency and patient care outcomes. They achieved this by outlining clear objectives and fostering collaboration.

Fostering a culture of continuous learning and adaptation is vital. This involves training staff to engage effectively with AI tools and encouraging collaboration across departments to share insights and best practices. By prioritizing transparency, fairness, and inclusivity in AI evaluation, providers can minimize bias and enhance the overall effectiveness of their AI applications.

Moreover, integrating real-time assessment strategies allows for immediate adjustments based on performance data, ensuring that AI systems evolve alongside clinical practices. As the medical sector increasingly embraces AI, a commitment to continuous improvement will be a key differentiator. This commitment enables providers to maximize the benefits of AI while mitigating potential risks. Notably, CosmaNeura stands out as the only company creating AI solutions for the billion-dollar faith-focused medical market, reinforcing the importance of ethical considerations in AI applications.

Isaac Asimov's First Law of Robotics states, "A robot may not injure a human being or, through inaction, allow a human being to come to harm." Although written for fiction, it underscores the necessity of adhering to human values in AI development, reminding us of our responsibility to prioritize patient safety and care.

Best Practices for Implementing AI Evaluations in Healthcare

To implement successful AI evaluations in healthcare, organizations should adhere to several best practices:

- Define Clear Objectives: Establish specific goals for AI utilization that align with clinical outcomes. This ensures that the technology serves to enhance care effectively, addressing the emotional challenges faced by providers.

- Engage Stakeholders: Actively involve a diverse group of stakeholders, including clinicians, administrative staff, and patients, in the assessment process. This engagement is crucial for gathering varied perspectives and fostering a sense of ownership over the tools being implemented. Statistics indicate that stakeholder engagement significantly enhances the effectiveness of AI evaluations, with studies showing a negative predictive value of 0.84 in relevant applications.

- Utilize Established Evaluation Frameworks: Employ recognized frameworks to guide the assessment of AI technologies. These frameworks facilitate a thorough analysis, ensuring that all relevant factors are considered in the evaluation process.

- Invest in Staff Training: Provide comprehensive training for medical personnel to improve their understanding and acceptance of AI technologies. This investment not only enhances operational efficiency but also empowers staff to leverage AI tools effectively in their daily practices, alleviating some of the burdens they face.

- Establish Continuous Feedback Mechanisms: Implement systems for ongoing feedback to monitor AI performance and adapt to changing medical needs. Regularly re-evaluating AI models with fresh data is essential to maintain their relevance and accuracy over time.
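
The negative predictive value mentioned in the list and the re-evaluation step in its last item can be sketched together. Everything below is a hedged illustration: the labels are invented, the helper names are hypothetical, the 0.84 baseline is borrowed from the figure quoted above purely as a placeholder, and the 0.05 tolerance is an arbitrary assumption.

```python
def confusion_counts(y_true, y_pred):
    """Count (tp, fp, tn, fn) for binary labels, where 1 means the condition is present."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn


def negative_predictive_value(y_true, y_pred):
    """Share of negative predictions that were truly negative: TN / (TN + FN)."""
    _, _, tn, fn = confusion_counts(y_true, y_pred)
    if tn + fn == 0:
        raise ValueError("the model made no negative predictions")
    return tn / (tn + fn)


def needs_review(baseline, fresh, max_drop=0.05):
    """Flag the model when the fresh score falls more than `max_drop` below baseline."""
    return baseline - fresh > max_drop


# Hypothetical fresh batch of confirmed outcomes and model predictions.
y_true = [0, 0, 0, 0, 0, 1, 1, 1, 0, 1]
y_pred = [0, 0, 0, 0, 1, 1, 1, 0, 0, 1]

fresh_npv = negative_predictive_value(y_true, y_pred)  # 5 / 6
flagged = needs_review(baseline=0.84, fresh=fresh_npv)
```

Running such a check on each new batch of confirmed outcomes gives the feedback mechanism a concrete trigger for a deeper audit.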

By adhering to these best practices, healthcare providers can effectively evaluate AI systems, resulting in enhanced care quality and operational efficiency. For instance, the University Medical Center Utrecht's development of the 'Sleep Well Baby' model illustrates the importance of quality management in AI applications, establishing a blueprint for safe AI usage in clinical settings. Furthermore, Google Health emphasizes that "AI is poised to transform medicine, delivering new, assistive technologies that will empower doctors to better serve their patients."

Involving stakeholders not only enhances the assessment process but also aligns AI initiatives with the needs and expectations of those directly affected by them. Reflecting on these practices can lead to a more compassionate and effective healthcare environment.

Future Directions: Evolving AI Evaluation in Healthcare

As AI technologies continue to advance, it’s vital that the evaluation methods accompanying them evolve as well. Healthcare providers often face emotional and operational challenges, and future pathways for AI evaluation in medical services are increasingly focused on integrating advanced analytics. This shift enables real-time measurement of AI efficacy, which not only enhances the precision of assessments but also allows for timely adjustments based on performance metrics. By addressing these urgent issues, we can tackle disjointed care and inefficiencies in medical delivery, including rising costs and physician burnout.

Moreover, establishing robust ethical frameworks is essential to guide the responsible application of AI, ensuring that patient care remains the utmost priority. With the help of generative AI and other innovative technologies, such as those developed by CosmaNeura, medical practitioners can streamline workflows and reduce administrative burdens. This ultimately leads to improved provider efficiency and better outcomes for patients.

Integrating client feedback into assessment metrics is another crucial aspect of this journey. By actively seeking input from individuals, medical organizations can gain invaluable insights into the effectiveness of AI applications, fostering a more patient-centered approach to care. This aligns with the need to bridge gaps in medical access and enhance the overall user experience.

Furthermore, it’s important for providers to stay attuned to emerging trends, such as generative AI and machine learning. This awareness ensures that assessment practices remain relevant and effective. The impact of advanced analytics on AI evaluation performance cannot be overstated. These analytics offer a deeper understanding of AI capabilities, paving the way for more informed decision-making and improved patient outcomes.

As medical service providers embrace these future directions, they will not only enhance their AI evaluation processes but also contribute to a more effective and ethical healthcare landscape. Additionally, the importance of well-structured training data cannot be overlooked, as it is crucial to prevent overfitting and ensure predictive accuracy in AI models. A case study highlighting business owners' perspectives on ChatGPT reveals significant support for AI's potential to enhance customer interactions and decision-making processes, which resonates with its applications in medical settings.

Notably, 30% of business owners anticipate that AI will generate website copy, reflecting a broader expectation of AI's impact on operational efficiency, an expectation that can be similarly anticipated in healthcare. Furthermore, Google's unsuccessful influenza prediction model underscores the necessity of stable training data in developing AI models, reinforcing the need for robust evaluation processes.
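
One simple guard against models that look accurate on the data they were built from, yet degrade later, is to hold out the most recent records and score them separately. The sketch below assumes rows with hypothetical `date`, `score`, and `label` fields and uses a fixed threshold rule as a stand-in for a trained model. It is not a reconstruction of the Google model mentioned above.

```python
def chronological_split(rows, train_fraction=0.75):
    """Order rows by date, then split into an earlier and a later period."""
    ordered = sorted(rows, key=lambda r: r["date"])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]


def accuracy(rows, predict):
    """Fraction of rows where the prediction matches the recorded label."""
    if not rows:
        raise ValueError("no rows to score")
    return sum(1 for r in rows if predict(r) == r["label"]) / len(rows)


def predict(row):
    """Stand-in for a trained model: a fixed threshold on a risk score."""
    return 1 if row["score"] >= 0.5 else 0


# Hypothetical rows; ISO-formatted date strings sort correctly as text.
rows = [
    {"date": "2023-03-01", "score": 0.9, "label": 1},
    {"date": "2023-01-01", "score": 0.2, "label": 0},
    {"date": "2023-02-01", "score": 0.8, "label": 1},
    {"date": "2023-04-01", "score": 0.1, "label": 0},
    {"date": "2023-05-01", "score": 0.7, "label": 1},
    {"date": "2023-06-01", "score": 0.3, "label": 0},
    {"date": "2023-07-01", "score": 0.6, "label": 0},
    {"date": "2023-08-01", "score": 0.4, "label": 0},
]

earlier, later = chronological_split(rows)
earlier_acc = accuracy(earlier, predict)
later_acc = accuracy(later, predict)
gap = earlier_acc - later_acc
```

A large gap between the earlier and later periods suggests the model, or the data feeding it, has drifted and deserves a closer look before its outputs are trusted.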

In this evolving landscape, how can we ensure that AI serves as a tool for compassion and efficiency in healthcare? Let’s continue to explore these possibilities together, fostering a future where technology and care go hand in hand.

Conclusion

The integration of artificial intelligence in healthcare presents a transformative opportunity, yet it requires careful evaluation to ensure that these technologies truly enhance patient care while adhering to ethical standards. Considerations in this evaluation process include:

- Clinical outcomes

- User experience

- Ethical implications of AI systems

By focusing on these elements, healthcare organizations can navigate the complexities of AI implementation with confidence, ultimately improving operational efficiency and patient outcomes.

Despite the challenges posed by data quality and clinician acceptance, a structured approach to AI evaluation can pave the way for successful integration. Adopting established frameworks, engaging stakeholders, and prioritizing continuous improvement are critical best practices that can drive innovation and enhance the effectiveness of AI applications in clinical settings. Moreover, aligning AI evaluations with ethical standards, particularly in faith-based healthcare environments, ensures that technology serves to uplift patient dignity and promote equitable access to care.

As the landscape of healthcare continues to evolve, the commitment to rigorous evaluation processes must remain steadfast. By embracing advanced analytics and incorporating real-time feedback, healthcare providers can adapt their AI strategies to meet the changing needs of patients and clinicians alike. This proactive approach not only fosters trust and acceptance among healthcare professionals but also positions organizations at the forefront of a new era in patient-centered care.

The journey toward effective AI evaluation and implementation is not merely a technological challenge; it is an essential commitment to enhancing the healthcare experience for all stakeholders involved. Let us embrace this opportunity together, ensuring that our efforts in AI lead to a brighter, more compassionate future for healthcare.